Cats and Dogs 2.1 (6 Conv layer architecture) with rotation

Hi,

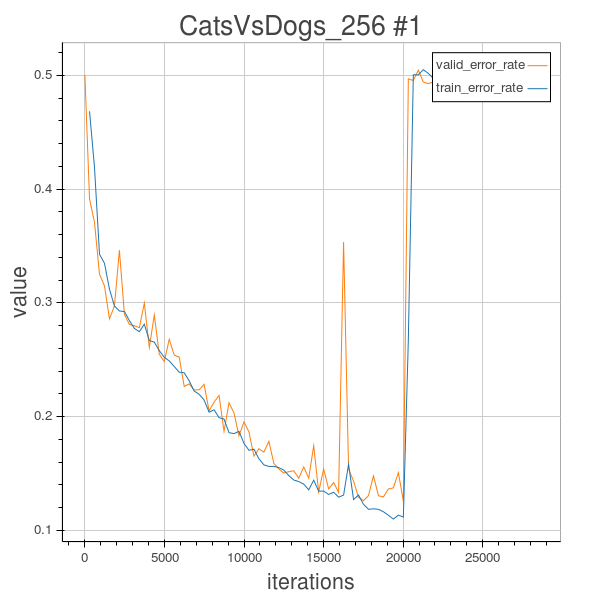

As mentioned in blog post 2.01, for experiment 2.1 I tried a CNN with 6 convolutional layers, but I ran into the same strange behaviour that Florian encountered. I used Adam() as the update rule. During training, the training error and validation error suddenly diverged, which completely ruined the training process.

In experiment 2.1, I still use the Random2DRotation method to rotate each training image by a random angle while keeping its label unchanged. This acts as regularization, since the model sees dog and cat images at many different random rotations. I will also try setting the parameters of Adam() explicitly rather than relying on its default values.
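To make the rotation idea concrete, here is a minimal pure-Python sketch of rotating an image by a random angle while leaving its label alone. It is not the Random2DRotation implementation; the names `rotate_image` and `random_rotation`, the nearest-neighbour sampling, the zero fill for uncovered pixels and the angle range are all assumptions made for illustration.

```python
import math
import random


def rotate_image(image, angle_deg, fill=0):
    """Rotate a 2D list-of-lists image about its centre (nearest neighbour).

    Pixels whose source falls outside the original image get `fill`.
    """
    height, width = len(image), len(image[0])
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    rotated = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Inverse mapping: find the source pixel that lands on (y, x).
            dx, dy = x - cx, y - cy
            sx = int(round(cos_t * dx + sin_t * dy + cx))
            sy = int(round(-sin_t * dx + cos_t * dy + cy))
            if 0 <= sx < width and 0 <= sy < height:
                rotated[y][x] = image[sy][sx]
    return rotated


def random_rotation(image, label, max_angle=180.0, rng=random):
    """Rotate `image` by an angle drawn uniformly from [-max_angle, max_angle].

    The label is returned untouched: a rotated cat is still a cat.
    """
    angle = rng.uniform(-max_angle, max_angle)
    return rotate_image(image, angle), label
```

Calling `random_rotation(image, label)` once per training example in every epoch means the network rarely sees exactly the same pixels twice, which is where the regularizing effect comes from.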

In this experiment, I’m using a CNN architecture with 6 convolutional layers.

The configurations are as follows:

num_epochs= 120

image_shape = (256,256)

filter_sizes = [(5,5),(5,5),(5,5),(5,5),(5,5),(5,5)]

feature_maps = [20,40,60,80,100, 120]

pooling_sizes = [(2,2),(2,2),(2,2)]

mlp_hiddens = [1000]

output_size = 2

weights_init=Uniform(width=0.2)

step_rule=Adam()

max_image_dim_limit_method= MaximumImageDimension

dataset_processing = rescale to 256*256
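Since the plan is to set Adam's parameters explicitly instead of relying on defaults, it helps to see what each parameter does. The sketch below is a plain-Python version of the Adam update as described in the original Adam paper, not the step rule used in this experiment; the function names `init_adam_state` and `adam_step` are hypothetical, and the default values shown are the paper's, which may differ from the library's defaults.

```python
import math


def init_adam_state(n_params):
    """Create the running statistics Adam keeps for each parameter."""
    return {"t": 0, "m": [0.0] * n_params, "v": [0.0] * n_params}


def adam_step(params, grads, state, learning_rate=0.001,
              beta1=0.9, beta2=0.999, epsilon=1e-8):
    """Apply one Adam update and return the new parameter list.

    Defaults follow the Adam paper (an assumption for this sketch).
    `state` is updated in place.
    """
    state["t"] += 1
    t = state["t"]
    new_params = []
    for i, (p, g) in enumerate(zip(params, grads)):
        # Exponential moving averages of the gradient and its square.
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g
        # Bias correction compensates for the zero initialisation.
        m_hat = state["m"][i] / (1 - beta1 ** t)
        v_hat = state["v"][i] / (1 - beta2 ** t)
        new_params.append(
            p - learning_rate * m_hat / (math.sqrt(v_hat) + epsilon))
    return new_params
```

On the very first step the update has magnitude close to `learning_rate` whatever the gradient scale, so lowering `learning_rate` is the most direct way to take smaller steps if training diverges.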