Cats and Dogs 2.01 (5 conv layer architecture) with rotation, validation error 19.6%

Hi,

As mentioned in blog post 1.3, I wanted to try some deeper architectures, so in this experiment 2.01 I used a CNN with 5 convolutional layers. The configuration is shown below.

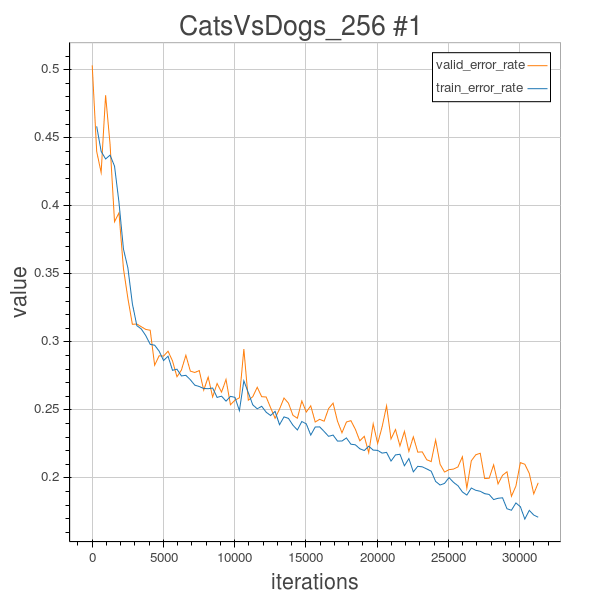

There are many regularization methods for countering overfitting. In this experiment 2.01, I still use the Random2DRotation method, which rotates each training image by a random angle and leaves its label untouched, so the model sees images of dogs and cats at many different orientations. As the learning curve of experiment 2.01 shows, this does help to reduce overfitting: overall, the training error and validation error curves stay close together, and the validation error after 100 epochs is 19.6%.
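To make the idea concrete, here is a minimal NumPy sketch of random rotation augmentation. It is not the Random2DRotation code used in the experiment: the function names `rotate_image` and `random_rotation_batch`, the nearest-neighbour sampling, the zero fill for pixels rotated in from outside the image, and the `max_angle` default are all my own assumptions for illustration.

```python
import numpy as np


def rotate_image(image, angle_deg):
    """Rotate a (H, W) or (H, W, C) image about its center.

    Uses inverse mapping with nearest-neighbour sampling. Output pixels whose
    source location falls outside the image are left at zero (an assumption).
    """
    angle = np.deg2rad(angle_deg)
    h, w = image.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    cos_a, sin_a = np.cos(angle), np.sin(angle)
    # For every output pixel, compute which source pixel it comes from.
    src_x = np.rint(cos_a * (xs - cx) + sin_a * (ys - cy) + cx).astype(int)
    src_y = np.rint(-sin_a * (xs - cx) + cos_a * (ys - cy) + cy).astype(int)
    inside = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(image)
    out[inside] = image[src_y[inside], src_x[inside]]
    return out


def random_rotation_batch(images, labels, rng, max_angle=180.0):
    """Rotate each image by its own random angle; labels pass through unchanged."""
    angles = rng.uniform(-max_angle, max_angle, size=len(images))
    rotated = np.stack([rotate_image(img, a) for img, a in zip(images, angles)])
    return rotated, labels


rng = np.random.default_rng(0)
batch = rng.random((4, 32, 32, 3))   # 4 fake RGB images
targets = np.array([0, 1, 1, 0])     # 0 = cat, 1 = dog (hypothetical encoding)
augmented, same_targets = random_rotation_batch(batch, targets, rng)
```

Because a new angle is drawn every time a batch is produced, the network rarely sees exactly the same pixels twice, which is where the regularizing effect comes from.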

This experiment uses the CNN architecture with 5 convolutional layers shown above.

The configurations are as follows:

num_epochs= 100

image_shape = (256,256)

filter_sizes = [(5,5),(5,5),(5,5),(5,5),(5,5)]

feature_maps = [20,40,60,80,100]

pooling_sizes = [(2,2),(2,2),(2,2),(2,2),(2,2)]

mlp_hiddens = [1000]

output_size = 2

weights_init=Uniform(width=0.2)

step_rule=Adam()

max_image_dim_limit_method= MaximumImageDimension

dataset_processing = rescale to 256*256
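As a sanity check on this configuration, the spatial size of the feature maps after each conv + pooling stage can be worked out by hand. The sketch below assumes "valid" convolutions (no padding, stride 1) and non-overlapping pooling that rounds down; the list above does not state these settings, so treat them as assumptions. The helper name `conv_pool_shapes` is mine.

```python
def conv_pool_shapes(image_shape, filter_sizes, pooling_sizes):
    """Return the (height, width) of the feature maps after each conv+pool stage.

    Assumes valid convolution with stride 1 and non-overlapping pooling
    with floor rounding.
    """
    h, w = image_shape
    shapes = []
    for (fh, fw), (ph, pw) in zip(filter_sizes, pooling_sizes):
        h, w = h - fh + 1, w - fw + 1   # valid convolution shrinks the map
        h, w = h // ph, w // pw         # pooling divides it, rounding down
        shapes.append((h, w))
    return shapes


image_shape = (256, 256)
filter_sizes = [(5, 5)] * 5
pooling_sizes = [(2, 2)] * 5
feature_maps = [20, 40, 60, 80, 100]

shapes = conv_pool_shapes(image_shape, filter_sizes, pooling_sizes)
# shapes == [(126, 126), (61, 61), (28, 28), (12, 12), (4, 4)]

# Number of values fed into the MLP after flattening the last conv layer.
mlp_inputs = shapes[-1][0] * shapes[-1][1] * feature_maps[-1]
# mlp_inputs == 1600
```

Under these assumptions, the last conv layer produces 100 maps of size 4x4, so the 1000-unit hidden layer receives 1600 inputs.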

However, it seems a little strange that the validation error still stagnates at around 19.6%. Should I train for more epochs, or should I change something else, such as the architecture or the image preprocessing?

As a next step, I will try adding a 6th conv layer and more feature maps in the last conv layer, to see whether this provides more information to the final MLP-softmax classification layer. It might also be worthwhile to try other data augmentation schemes as regularization.
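One thing worth checking before adding the 6th layer is whether it still fits spatially. The quick calculation below starts from the 256x256 input and the five 5x5 conv / 2x2 pooling stages listed in the configuration, and again assumes valid convolutions with stride 1 and floor-rounded pooling, which may not match the actual setup. The helper `output_side` and the 3x3 alternative are hypothetical, only there to illustrate the arithmetic.

```python
def output_side(side, filter_side, pool_side):
    """Side length after one valid conv + pooling stage, or 0 if the filter does not fit."""
    conv = side - filter_side + 1
    if conv < 1:
        return 0
    return conv // pool_side


side = 256
for _ in range(5):                         # the five existing 5x5 / 2x2 stages
    side = output_side(side, 5, 2)

sixth_with_5x5 = output_side(side, 5, 2)   # a 5x5 filter on the remaining map
sixth_with_3x3 = output_side(side, 3, 2)   # a smaller 3x3 filter instead

print(side, sixth_with_5x5, sixth_with_3x3)
```

If the assumptions hold, the map entering a 6th layer is only 4x4, so a 5x5 valid filter has no room left. A smaller filter, padded convolutions, or dropping one of the pooling steps would be ways around this.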